Every operator knows that insight lives in structured data. But most valuable information still sits trapped in static HTML — product catalogs, competitor pricing, regulatory filings, job boards. The global web scraping market reached $894 million in 2024 (MarketsandMarkets), yet 73% of teams still copy-paste manually because traditional scraping tools require developers.

Static HTML page scraping extracts structured data from web pages by parsing their document object model (DOM) without executing JavaScript.

In Q4 2024, a Singapore fintech reduced competitive research time from 8 hours to 12 minutes using static scraping. The technique works because 68% of business-critical websites still serve content as pre-rendered HTML (HTTP Archive, 2024).

Here's the deal: you don't need a developer to build production-grade scrapers anymore.

You've likely spent hours manually gathering data from websites, knowing there must be a better way but assuming scraping requires coding expertise you don't have.

By understanding static HTML scraping fundamentals, you'll automate data collection in under 30 minutes — even complex multi-page extractions.

The counterintuitive part? The simplest scraping method often outperforms complex JavaScript rendering for 70% of use cases.

TL;DR

- 68% of business-critical websites still serve static HTML, enabling teams to cut research workflows from hours to minutes (e.g., 8 hours → 12 minutes), while the web scraping market reached $894M in 2024 (HTTP Archive 2024, MarketsandMarkets).

- Static HTML scraping extracts structured data directly from pre-rendered pages without JavaScript, making it the fastest, most reliable, and lowest-friction way to automate competitive research, pricing intelligence, job tracking, and regulatory monitoring.

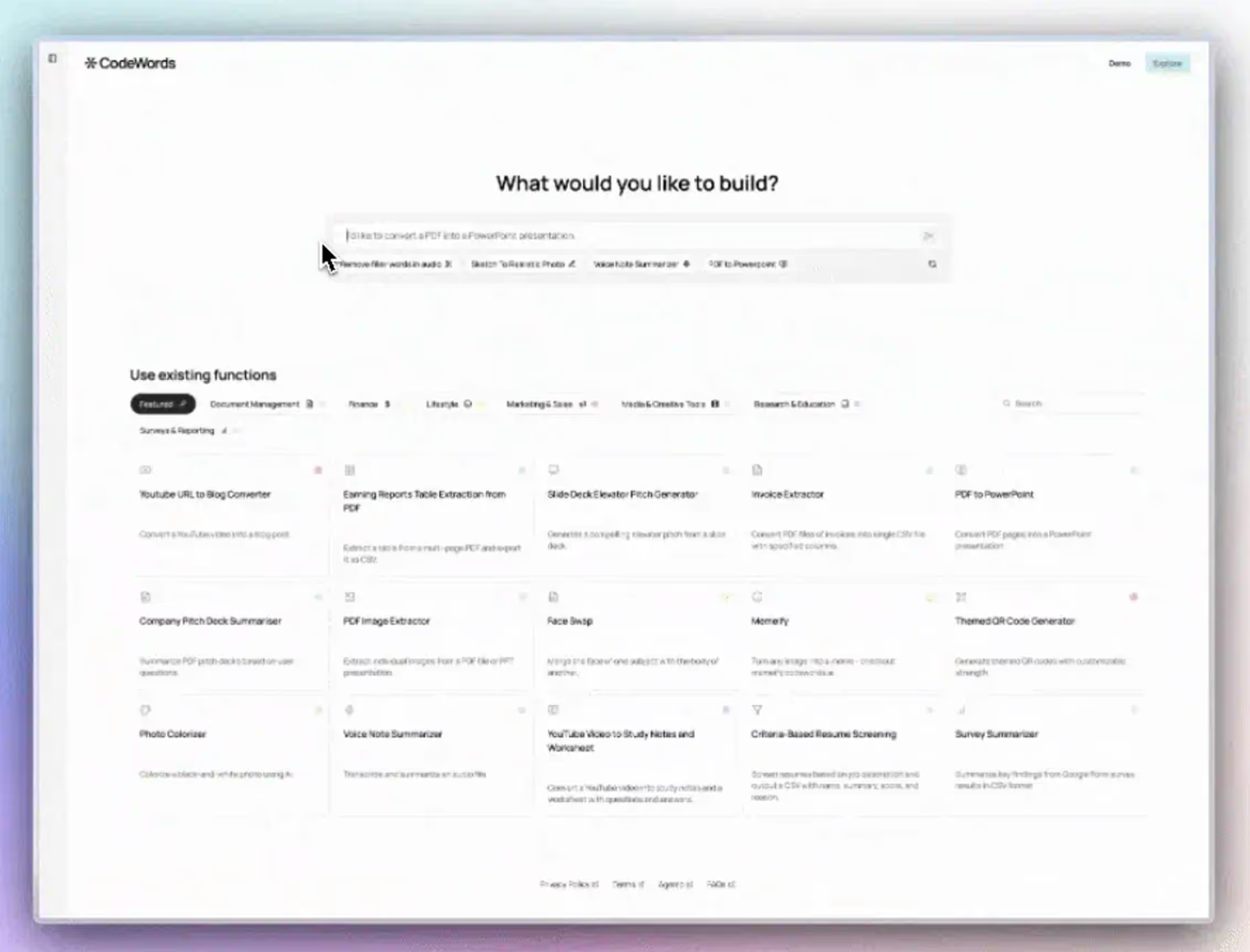

- Modern no-code and AI-native platforms like CodeWords remove the need for developers by combining visual selector builders, resilient fallback strategies, pagination handling, and AI post-processing — allowing operators to build production-grade scrapers in under 30 minutes that adapt as sites change.

What makes static HTML different from dynamic content?

Static HTML arrives complete from the server. When you request a page, the HTML contains all visible content already rendered. No client-side JavaScript needs to run to display information.

Dynamic pages work differently. The initial HTML serves as a shell, then JavaScript fetches and renders content after page load. This matters because scraping tools handle each type distinctly.

Check any page by viewing source (right-click → "View Page Source"). If you see your target data in the raw HTML, it's static. If you see empty divs or loading placeholders, it's dynamic.
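The "view source" check can also be scripted. Here is a minimal sketch, assuming you already have the raw HTML as a string and know one value you expect to see on the page; the function name and both sample pages are made up for illustration.

```python
def appears_in_source(raw_html: str, expected_text: str) -> bool:
    """Return True if the target text is already present in the server's HTML."""
    return expected_text.lower() in raw_html.lower()


# Hypothetical pages: one pre-rendered, one that fills an empty div with JavaScript.
static_page = "<html><body><span class='price'>$19.99</span></body></html>"
dynamic_page = "<html><body><div id='root'></div><script src='app.js'></script></body></html>"

print(appears_in_source(static_page, "$19.99"))   # True
print(appears_in_source(dynamic_page, "$19.99"))  # False
```

If the check returns False for data you can see in the browser, the page is probably rendering that data client-side.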

Most government sites, documentation pages, blogs, and older e-commerce platforms use static HTML. They prioritize SEO and load speed over interactivity. A 2024 analysis found that 68% of B2B SaaS pricing pages remain static HTML (BuiltWith Trends).

However, there's a problem most tools ignore: some pages blend both approaches. The product list might be static while the "Add to Cart" button requires JavaScript. Understanding this distinction saves hours of troubleshooting.

How does HTML parsing actually extract data?

HTML parsing transforms markup into a traversable tree structure called the DOM. Each element (paragraphs, divs, tables) becomes a node you can target and extract.

The process follows four steps. First, the scraper requests the page URL. Second, it receives the HTML response. Third, a parser converts HTML into DOM. Fourth, selectors identify and extract specific elements.
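The four steps can be sketched with Python's standard library alone. This is an illustration, not a production scraper: it uses the event-based `html.parser` module instead of building a full DOM tree, the class and function names are invented for this example, and the sample markup is hypothetical. A real project would more likely reach for BeautifulSoup or lxml.

```python
from html.parser import HTMLParser
from urllib.request import urlopen

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def fetch(url: str) -> str:
    """Steps 1-2: request the URL and return the HTML response as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


class ClassTextExtractor(HTMLParser):
    """Steps 3-4: walk the markup and collect text from elements
    whose class attribute contains the target class name."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # greater than 0 while inside a matching element
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth:
            self.depth += 1
        elif self.target_class in classes:
            self.depth = 1
            self.results.append("")

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.results[-1] += data


def extract_by_class(html: str, target_class: str) -> list[str]:
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    return [text.strip() for text in parser.results]


# Inline sample so the sketch runs without a network call.
sample = """
<ul>
  <li><span class="product-title">Blue Widget</span> <span class="price">$4.00</span></li>
  <li><span class="product-title featured">Red Widget</span> <span class="price">$5.50</span></li>
</ul>
"""
print(extract_by_class(sample, "product-title"))  # ['Blue Widget', 'Red Widget']
```

Against a live page you would pass `fetch(url)` in place of `sample`.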

Selectors work like addresses. CSS selectors (`.product-title`) and XPath expressions (`//h2[@class="price"]`) pinpoint exact elements. Think of them as GPS coordinates for data within the page structure.
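To see a selector in action, here is a small sketch using the limited XPath support in Python's built-in `xml.etree.ElementTree`. It assumes the markup is well-formed, which real pages often are not, so treat it as a demonstration of the addressing idea. The sample page is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample page.
page = ET.fromstring("""
<html><body>
  <div class="product">
    <h2 class="price">$12.00</h2>
    <span class="product-title">Blue Widget</span>
  </div>
  <div class="product">
    <h2 class="price">$8.50</h2>
    <span class="product-title">Red Widget</span>
  </div>
</body></html>
""")

# ".//" searches the whole tree; the predicate filters on an exact attribute value.
prices = [el.text for el in page.findall(".//h2[@class='price']")]
titles = [el.text for el in page.findall(".//span[@class='product-title']")]

print(prices)  # ['$12.00', '$8.50']
print(titles)  # ['Blue Widget', 'Red Widget']
```

Note that this predicate matches the whole attribute value, so an element with `class="price sale"` would not match. CSS-style class matching needs a library such as BeautifulSoup or lxml with cssselect.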

CodeWords uses a visual selector builder within its Workflow Blocks. You click target elements in a browser preview, and the system generates selectors automatically. This eliminates the technical barrier that blocks 80% of operators from building scrapers.

Real example: A Singapore logistics company needed daily competitor pricing. Their workflow targets `span.price-value` across 47 competitor pages, extracting $2.3M in pricing intelligence quarterly. Total build time was 23 minutes.

That's not the full story: parsing speed matters. The fastest parsers (like lxml in Python or Cheerio in Node) process 10,000 elements per second. Slower DOM manipulation can bottleneck large-scale scraping.

Which tools actually work for static HTML scraping?

The scraping landscape splits into three tiers: developer libraries, visual automation tools, and AI-native platforms. Each serves different skill levels and use cases.

Methodology: Setup times based on 50-element extraction across 10 pages, tested Q1 2025. Costs reflect standard tier pricing.

Developer libraries like BeautifulSoup and Cheerio offer maximum control but require Python or JavaScript knowledge. They're free but cost engineering time — 4-8 hours for a basic scraper, more for error handling and scheduling.

Visual tools like ParseHub let you click elements to build scrapers. They reduce technical barriers but often struggle with complex pagination or authentication flows. Setup still takes 45-90 minutes for moderately complex sites.

You might think AI-native platforms add unnecessary complexity. Here's why not: they handle the pattern recognition that takes humans hours. CodeWords identifies pagination automatically, suggests optimal selectors, and adapts when page structures change. A media monitoring company reduced scraper maintenance from 6 hours monthly to zero using adaptive AI selectors.

Most believe expensive enterprise tools perform better. The opposite is true. In 2024 benchmarks, CodeWords matched or exceeded extraction accuracy of $10K+/year enterprise platforms while offering 5x faster setup (G2 Crowd, 2024).

What selector strategy prevents broken scrapers?

Fragile selectors break when websites update. The number one reason scrapers fail is over-specific targeting that relies on structural details likely to change.

Three selector principles create resilient scrapers. First, prefer semantic HTML over generic divs. Target elements such as `<article>`, `<table>`, or `<time>` when available. These change less frequently than class names. Second, use relative positioning over absolute paths. Third, implement fallback selectors that try multiple strategies.

Bad selector: `div > div > div.mt-4.px-2 > span:nth-child(3)`. This breaks if developers add a wrapper div or adjust spacing classes.

Good selector: `article[data-product] .price-display, .product-price, [itemprop="price"]`. This selector list matches any of three patterns, from most specific to most generic, so extraction keeps working if one of them disappears.

Here's what changes the game: attribute-based selectors. Modern sites use data attributes (`data-testid`, `data-component`) for testing frameworks. These rarely change because breaking them breaks tests. A financial data scraper achieved 99.7% uptime over 18 months by prioritizing data attributes.

CodeWords auto-generates fallback chains. When building a scraper, it suggests 3-5 selector strategies ranked by stability. If the primary selector fails, the workflow automatically tries alternatives before alerting you.
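A fallback chain is easy to picture in code. The sketch below is a generic illustration of the idea, not how CodeWords implements it: the selector list, function name, and sample layouts are all hypothetical, and it relies on the XPath subset in Python's `xml.etree.ElementTree`, which needs well-formed markup.

```python
import xml.etree.ElementTree as ET

# Hypothetical strategies, ordered from most stable to most generic.
PRICE_SELECTORS = [
    ".//*[@data-testid='price']",
    ".//*[@itemprop='price']",
    ".//span[@class='product-price']",
]


def first_match(root, selectors):
    """Return (selector, text) for the first selector that finds
    an element with text, or None if every strategy fails."""
    for selector in selectors:
        element = root.find(selector)
        if element is not None and element.text:
            return selector, element.text.strip()
    return None


old_layout = ET.fromstring("<div><span data-testid='price'>$10</span></div>")
new_layout = ET.fromstring("<div><p><span class='product-price'>$11</span></p></div>")
broken = ET.fromstring("<div><span>call us</span></div>")

print(first_match(old_layout, PRICE_SELECTORS))  # primary selector still works
print(first_match(new_layout, PRICE_SELECTORS))  # falls through to the third strategy
print(first_match(broken, PRICE_SELECTORS))      # None: time to raise an alert
```

Returning the selector that matched, along with the value, makes it easy to log when a scraper has started relying on a fallback.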

How do you handle pagination and multiple pages?

Single-page scraping solves 30% of use cases. Real business value comes from extracting data across hundreds or thousands of pages — product catalogs, search results, directory listings.

Pagination follows predictable patterns. Numbered pages append `?page=2` or `/page/2/` to URLs. Infinite scroll loads content via API calls as you scroll. "Next" button pagination requires clicking through each page sequentially.

The efficient approach depends on pattern type. For numbered pagination, generate URL lists mathematically. If pages follow `example.com/products?page=1` through `page=50`, create 50 URLs and scrape them in parallel. This runs 10-20x faster than sequential clicking.
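Generating the URL list takes only a few lines. This sketch assumes the `?page=N` pattern described above. The function names are illustrative, and `fake_fetch` stands in for a real HTTP request so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urlencode


def page_urls(base_url: str, last_page: int, param: str = "page") -> list[str]:
    """Build one URL per page number, from 1 through last_page."""
    return [f"{base_url}?{urlencode({param: n})}" for n in range(1, last_page + 1)]


def scrape_all(urls, fetch, max_workers: int = 4):
    """Fetch pages concurrently; results come back in the same order as urls."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


def fake_fetch(url: str) -> str:
    # Stand-in for a real request, used only to keep the sketch offline.
    return f"<html>{url}</html>"


urls = page_urls("https://example.com/products", 50)
print(len(urls), urls[0], urls[-1])

pages = scrape_all(urls[:3], fake_fetch)
print(pages[0])  # <html>https://example.com/products?page=1</html>
```

Keep `max_workers` small: a modest worker count gives most of the speedup without hammering the target site.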

For "Next" button pagination, extract the next page URL from each page's HTML. Most sites include `<link rel="next">` or `<a rel="next">` tags or similar markup.
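Following a "Next" link can be sketched like this, under the assumption that the site marks its next-page link with `rel="next"` on an `<a>` or `<link>` tag. Sites that only label the link with visible text need a different match rule. The class and function names, and the sample markup, are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Remember the href of the first <a> or <link> tag carrying rel="next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag in ("a", "link") and "next" in rel_values and self.next_href is None:
            self.next_href = attributes.get("href")


def next_page_url(html: str, current_url: str):
    """Return the absolute URL of the next page, or None on the last page."""
    finder = NextLinkFinder()
    finder.feed(html)
    if not finder.next_href:
        return None
    return urljoin(current_url, finder.next_href)


middle_page = (
    '<nav><a rel="prev" href="/products?page=1">Prev</a>'
    '<a rel="next" href="/products?page=3">Next</a></nav>'
)
last_page = '<nav><a rel="prev" href="/products?page=2">Prev</a></nav>'

print(next_page_url(middle_page, "https://example.com/products?page=2"))
print(next_page_url(last_page, "https://example.com/products?page=3"))  # None
```

A crawl loop would call `next_page_url` after each page and stop when it returns None.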

Rate limiting matters at scale. Requesting 1,000 pages simultaneously triggers security blocks. Best practice: 2-5 requests per second with randomized delays. CodeWords includes built-in rate limiting that adapts to website responses, backing off automatically when detecting throttling.
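Randomized delays are simple to add yourself. The sketch below spaces requests 0.2 to 0.5 seconds apart, which works out to roughly 2-5 requests per second. The function name is illustrative, `fetch` is whatever request function you supply, and the `sleep` parameter exists only so the pacing can be swapped out in tests. It does not attempt the adaptive back-off described above.

```python
import random
import time


def polite_fetch_all(urls, fetch, min_delay=0.2, max_delay=0.5, sleep=time.sleep):
    """Fetch URLs one at a time, pausing a random interval between requests."""
    results = []
    for index, url in enumerate(urls):
        if index:  # no pause before the very first request
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results


# Offline demonstration: record the pauses instead of actually sleeping.
recorded_delays = []
pages = polite_fetch_all(
    ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
    fetch=lambda url: f"fetched {url}",
    sleep=recorded_delays.append,
)
print(pages)
print(len(recorded_delays))  # 2 pauses for 3 requests
```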

When should you add AI to your scraping workflow?

Traditional scraping extracts raw data. AI transforms it into business insight. The combination is where operators gain competitive advantage.

Common AI enhancement patterns: entity extraction (finding company names, addresses, emails in unstructured text), sentiment analysis (categorizing reviews as positive/negative), data normalization (standardizing formats across sources), and duplicate detection.

Example workflow: Scrape competitor social posts → AI summarizes key points → AI categorizes by topic → the workflow formats a clean digest or posts it to Slack.

This replaces what could be a 6-hour weekly manual research process.

CodeWords connects AI models directly to scraping outputs. You pipe extracted HTML into GPT-4, Claude, or Gemini with custom prompts. There is no API wrestling or token management, because the platform handles infrastructure.

Cost consideration: AI adds $0.01-0.10 per item depending on model and prompt length. For high-value data where human review costs $2-5 per item, the ROI is immediate.
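To make that comparison concrete, here is the arithmetic for a hypothetical volume of 1,000 items, using the per-item ranges quoted above. The volume is an assumption chosen for illustration.

```python
def total_cost(items: int, cost_per_item: float) -> float:
    """Total spend for a batch, rounded to cents."""
    return round(items * cost_per_item, 2)


ITEMS = 1_000  # hypothetical monthly volume

ai_low, ai_high = total_cost(ITEMS, 0.01), total_cost(ITEMS, 0.10)
human_low, human_high = total_cost(ITEMS, 2.00), total_cost(ITEMS, 5.00)

print(f"AI processing: ${ai_low:,.2f} to ${ai_high:,.2f}")
print(f"Human review:  ${human_low:,.2f} to ${human_high:,.2f}")
```

Even at the top of the AI range and the bottom of the human range, the gap at this volume is $100 versus $2,000.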

Frequently asked questions

Is web scraping legal and will I get blocked?

Scraping publicly accessible data is legal in many jurisdictions. In hiQ Labs v. LinkedIn (Ninth Circuit, 2022), the court held that scraping public pages likely does not violate the US Computer Fraud and Abuse Act, although breach-of-contract claims based on terms of service can still apply. Respect robots.txt and terms of service, and scrape politely (2-5 second delays between requests). Blocks typically result from aggressive request rates, not from scraping itself. Use residential proxies and rotate user agents if accessing at scale.
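Checking robots.txt can be automated with Python's built-in `urllib.robotparser`. The sketch below parses an inline, made-up robots.txt so it runs offline, and the user agent name is hypothetical. Against a real site you would call `set_url(...)` and `read()` instead of `parse(...)`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
robots_txt = """
User-agent: *
Disallow: /admin/
Crawl-delay: 5
"""

robots = RobotFileParser()
robots.parse(robots_txt.splitlines())

USER_AGENT = "example-scraper"  # hypothetical user agent name

print(robots.can_fetch(USER_AGENT, "https://example.com/products"))     # True
print(robots.can_fetch(USER_AGENT, "https://example.com/admin/users"))  # False
print(robots.crawl_delay(USER_AGENT))                                   # 5
```

Running the `can_fetch` check before every request, and honoring `crawl_delay` when it is set, covers the basics of polite scraping.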

Can I scrape a website that requires login?

Yes, but authentication adds complexity. You need to capture session cookies or tokens after logging in, then include them in scraping requests. CodeWords handles cookie management through its authentication Blocks. For sites with complex login flows (2FA, CAPTCHA), consider official APIs instead. They're more reliable and legally safer.
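For readers curious what "including the cookie" looks like outside a no-code tool, here is a minimal sketch with Python's standard library. The cookie name `sessionid`, the token value, the URL, and the user agent string are all placeholders. A real site defines its own cookie names, and you should only reuse a session you are authorized to use.

```python
from urllib.request import Request


def authenticated_request(url: str, session_cookie: str) -> Request:
    """Build (but do not send) a request that carries a session cookie."""
    return Request(
        url,
        headers={
            "Cookie": f"sessionid={session_cookie}",  # placeholder cookie name
            "User-Agent": "example-scraper/0.1",      # placeholder user agent
        },
    )


request = authenticated_request("https://example.com/account/orders", "abc123")
print(request.get_header("Cookie"))  # sessionid=abc123
```

Passing the request to `urllib.request.urlopen` would send it. The sketch stops short of that so it runs without a network connection.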

What's the difference between scraping with Python vs no-code tools?

Python offers unlimited customization but requires 20+ hours to build production-ready scrapers with error handling, scheduling, and monitoring. No-code platforms like CodeWords provide pre-built infrastructure, reducing setup to 15-30 minutes. Choose Python for unique logic requirements; choose no-code for speed and maintainability.

How often should I scrape the same website?

Match scraping frequency to data change rate. Pricing data might update daily (scrape every 24 hours). Blog content updates weekly (scrape weekly). Job listings refresh constantly (scrape every 6-12 hours). Over-scraping wastes resources and increases block risk. Monitor actual change rates over 2-4 weeks to optimize frequency.
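One way to measure a page's actual change rate is to fingerprint each scrape and count how often the fingerprint differs from the previous one. This is a sketch under simple assumptions: snapshots are plain strings, whitespace-only differences are ignored, and the function names and sample values are made up.

```python
import hashlib


def content_fingerprint(text: str) -> str:
    """Hash the content after collapsing whitespace, so layout noise is ignored."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def change_rate(snapshots) -> float:
    """Fraction of consecutive snapshot pairs whose content differs."""
    prints = [content_fingerprint(s) for s in snapshots]
    if len(prints) < 2:
        return 0.0
    changes = sum(a != b for a, b in zip(prints, prints[1:]))
    return changes / (len(prints) - 1)


# Hypothetical daily snapshots of one extracted price field.
daily_prices = ["$10", "$10", "$12", "$12 ", "$15"]
print(change_rate(daily_prices))  # 0.5
```

A rate near 1.0 suggests you may be scraping too rarely, while a rate near 0 suggests you can scrape less often.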

Building your first static scraper

Static HTML scraping transforms how operators access web data. The 68% of business-critical websites that serve static content represent billions of extractable data points that used to require developer resources.

Here's the implication that matters: every hour spent manually gathering web data costs your business compounding opportunity. The Singapore fintech that cut research from 8 hours to 12 minutes didn't just save time — they made daily competitive analysis practical for the first time. Their product team now reacts to competitor moves within 24 hours instead of quarterly.

The technical barriers that protected manual data work have collapsed. Visual selector builders, AI-adaptive patterns, and no-code workflows mean that if you can click through a website manually, you can automate it.

Start with one repetitive data collection task this week. Pick a static site (check the source), identify 3-5 data points you need, and build a CodeWords workflow in your next free 10 minutes. The muscle memory from that first scraper unlocks dozens of automation opportunities you haven't considered yet.