Extracting data from LinkedIn is like trying to mine a rare mineral from a heavily guarded quarry. Do it recklessly, and you trigger alarms. Do it with precision and the right tools, and you unearth a valuable asset. A LinkedIn scraper is a tool designed to automate this extraction, turning messy public web content into structured data like a CSV file. A 2024 report by Bright Data revealed that 78% of businesses now use web scraping for market research and lead generation, underscoring its importance. The core challenge isn’t just getting the data; it’s architecting a process that is both powerful and sustainable.

Many founders and operators feel trapped in the manual grind of prospecting and recruiting. This eats up hours that should be spent on strategy, creating a bottleneck that prevents scaling. The promise of a LinkedIn scraper is to break this cycle, but the common brute-force approach often leads to banned accounts and unreliable data. The key is to shift from aggressive data grabbing to building intelligent, compliant data pipelines that work with the platform, not against it.

TL;DR

- 78% of businesses use web scraping for market research and lead generation (Bright Data, 2024).

- Sustainable automation prioritizes official APIs and human-in-the-loop models over high-risk, brute-force scraping that violates LinkedIn's terms of service.

- The legal precedent from hiQ Labs v. LinkedIn held that scraping publicly available data likely does not violate the CFAA, but accessing data behind a login wall still violates LinkedIn's User Agreement and carries real legal risk.

How does LinkedIn scraping actually work?

It’s easy to think of a LinkedIn scraper as a rogue agent, but it's more like an automated architect following a digital blueprint. Its job is to visit a structure (a LinkedIn page) and gather specific materials like names, job titles, or company details.

It does this by reading the underlying code of the page, much like an architect reads plans to understand where support beams and windows are located. This process isn't magic; it's a logical sequence of asking for the page, reading its code, and organizing the findings.

That’s not the full story.

The entire process unfolds in three distinct stages, transforming messy web content into a valuable, organized asset.

Sending the initial request

First, the scraper must ask for the page. It sends an HTTP request to a specific LinkedIn URL, which is exactly what your web browser does. This request simply asks LinkedIn's servers to send over the HTML content for that page.
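To make that first step concrete, here is a minimal sketch of building and sending a plain HTTP GET with Python's standard library. The URL, the user agent string, and the function names are placeholders invented for illustration; nothing here is specific to LinkedIn, and the request is only constructed, not sent, when the snippet runs.

```python
from urllib.request import Request, urlopen

# Hypothetical values for illustration only.
EXAMPLE_URL = "https://example.com/public-page"
USER_AGENT = "example-research-bot/0.1 (contact@example.com)"


def build_request(url, user_agent=USER_AGENT):
    """Create a GET request that identifies the client honestly."""
    return Request(url, headers={"User-Agent": user_agent}, method="GET")


def fetch_html(url, timeout=10.0):
    """Send the request and return the response body as text."""
    with urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Build the request without sending it, so the sketch runs offline.
request = build_request(EXAMPLE_URL)
print(request.get_method(), request.full_url)
```

Calling `fetch_html(EXAMPLE_URL)` would perform the actual network round trip and return the raw HTML, which is the input to the parsing stage.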

But there’s a problem most tools ignore. Modern sites like LinkedIn load information dynamically with JavaScript. To handle this, a scraper often uses a headless browser — a full browser like Chrome that runs silently in the background. It renders the entire page, including dynamic content, ensuring every piece of data is loaded before extraction begins. To avoid being blocked, scrapers also use proxies, which are intermediary servers that mask the scraper's real IP address. By funneling requests through different proxies, the scraper appears like multiple distinct users, helping it evade rate limits.

Parsing the HTML blueprint

Once LinkedIn's server sends back the page's HTML, the real work begins. The HTML code is the blueprint of the page, holding all the text and structural tags. The scraper then uses a parser to navigate this code and pinpoint the exact data it's looking for.

It finds the data by searching for unique patterns or "selectors." For instance, a job title on a profile page is typically wrapped in a predictable HTML element (such as an <h1> or <h2> with a particular class). The scraper is programmed to find that element and pull out the text inside. It repeats this for every piece of info it needs. Because these selectors depend on the page's current markup, they break whenever the site changes its layout.
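The selector idea can be sketched with Python's built-in `html.parser`. The markup, class names, and field mapping below are invented for the example and do not reflect LinkedIn's real page structure, which differs and changes often.

```python
from html.parser import HTMLParser

# Hypothetical markup: real pages use different, frequently changing structure.
SAMPLE_HTML = """
<html><body>
  <h1 class="profile-name">Ada Example</h1>
  <h2 class="profile-title">Head of Data</h2>
  <span class="profile-company">Example Corp</span>
</body></html>
"""

# Maps a CSS class (the "selector") to the output field name.
FIELD_BY_CLASS = {
    "profile-name": "name",
    "profile-title": "title",
    "profile-company": "company",
}


class ProfileParser(HTMLParser):
    """Collects the text inside elements whose class matches a known field."""

    def __init__(self):
        super().__init__()
        self.fields = {}            # field name -> extracted text
        self._current_field = None  # field being captured, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for css_class in classes:
            if css_class in FIELD_BY_CLASS:
                self._current_field = FIELD_BY_CLASS[css_class]

    def handle_data(self, data):
        if self._current_field and data.strip():
            self.fields[self._current_field] = data.strip()
            self._current_field = None

    def handle_endtag(self, tag):
        self._current_field = None


parser = ProfileParser()
parser.feed(SAMPLE_HTML)
profile = parser.fields
print(profile)
```

Keeping the selectors in one mapping means a layout change only requires updating `FIELD_BY_CLASS`, not the parsing logic.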

If you want to go deeper on the technical side, read our guide on how to extract data from websites.

This entire flow, from raw HTML to structured records, is what makes scraping so powerful: it turns unstructured chaos into a clean, usable business asset.

Structuring and exporting the data

The final step is to bring order to the chaos. Raw data pulled straight from HTML is just a jumble of text strings. To be useful, it needs to be organized.

The scraper takes all the individual data points (name, title, company) and neatly arranges them into a structured format, usually a CSV file or a JSON object. Each row in the spreadsheet or object in the file corresponds to a single profile, with columns representing the different data fields. This creates a clean, machine-readable dataset ready for a CRM, database, or analytics tool.
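As a rough illustration of this export step, the sketch below serializes a couple of made-up profile records to both CSV and JSON using only the standard library. The records, field names, and helper functions are assumptions for the example.

```python
import csv
import io
import json

# Hypothetical records, shaped like the output of a parsing step.
profiles = [
    {"name": "Ada Example", "title": "Head of Data", "company": "Example Corp"},
    {"name": "Sam Sample", "title": "Recruiter", "company": "Sample Ltd"},
]
FIELDS = ["name", "title", "company"]


def to_csv(rows, fieldnames=FIELDS):
    """Serialize rows to CSV text: one header line, then one line per profile."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize rows to a JSON array with one object per profile."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(profiles)
json_text = to_json(profiles)
print(csv_text)
```

CSV suits spreadsheet and CRM imports, while JSON keeps nested fields intact if a record later grows beyond flat columns.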

This final, structured output is what turns a technical exercise into a strategic business activity. The global web scraping services market is projected to reach $11.1 billion by 2030, growing at a CAGR of 15.1% from 2023 (Grand View Research, 2023). This growth reflects the increasing demand for structured data to power business intelligence and AI.

What are the legal boundaries of scraping LinkedIn?

Figuring out the legal and ethical lines of LinkedIn scraping is where things get serious. Going after data recklessly is like building on shaky ground — it’s just a matter of time before it all comes crashing down. The real question isn't just "can I do this technically?" but "what's my legal and reputational risk?"

And that entire conversation hinges on one landmark court case: hiQ Labs v. LinkedIn.

The precedent of public data

The legal showdown between hiQ Labs and LinkedIn set the stage for where we are today. In 2019, the U.S. Court of Appeals for the Ninth Circuit ruled that scraping data that's publicly available on the internet likely doesn't violate the Computer Fraud and Abuse Act (CFAA), a position it reaffirmed in 2022. This was a significant decision, establishing that accessing information a platform makes visible to anyone is not, by itself, unauthorized access under the CFAA.

However, this ruling is not a free pass. It draws a very clear line in the sand.

The critical difference is between public data (profiles you can see without logging in) and private data (anything behind a login wall). Once you access information that requires you to be logged in, you're bypassing a technical barrier. That single action pulls you out of the "public domain" and puts you at risk of violating both the CFAA and LinkedIn’s own User Agreement. Scraping public data is legally defensible under current U.S. law. Scraping data that requires a login is a direct violation of LinkedIn’s terms and puts your account and business at significant risk.

The clear line you cannot cross

So, what does that actually mean for you?

If your LinkedIn scraper works without logging into an account and only grabs info from profiles that are visible to the public, you’re on much safer legal footing. The second your automation logs into an account to get non-public info, like someone’s full list of connections or their private messages, you’ve crossed a line.

LinkedIn is crystal clear about this in its terms of service. Break those terms, and they have every right to take action. This usually looks like:

- Temporary or permanent account suspension: This is the most common result.

- IP address blocking: LinkedIn will simply block the IP addresses it associates with the scraping activity.

- Legal action: While less common for small-scale use, LinkedIn has pursued legal action against companies that systematically violate its rules.

Moving from legal to ethical frameworks

Beyond the law, there’s the ethical side. Just because you can grab public data doesn’t always mean you should without careful consideration. Ethical data handling respects user privacy and intent. People put their careers on LinkedIn for professional networking, not so their data can be used for mass outreach campaigns.

A responsible approach always puts consent and transparency first. Anyone looking to use scraped data for hiring, for example, needs a solid grasp of regulations like GDPR compliance in recruiting. A 2023 study by the European Union Agency for Cybersecurity found that 67% of data breaches were tied to the misuse of personally identifiable information (PII), which highlights the importance of proper data handling.

Building a business process on a foundation that violates a platform's rules is asking for trouble. A structured, compliant strategy is safer and helps you build a more sustainable operation. This means looking at official APIs, using human-in-the-loop systems for sensitive tasks, and always choosing permission-based data collection over unauthorized scraping.

How does LinkedIn detect and block scraping?

Think of using LinkedIn like walking through a high-security building. A normal user moves at a human pace and doesn’t set off any alarms. A brute-force LinkedIn scraper, on the other hand, is like someone trying to rattle hundreds of doors a minute. The building’s security system is designed to spot that frantic, unnatural behavior.

This is the classic cat-and-mouse game of data scraping. LinkedIn has a sophisticated, multi-layered defense system to differentiate a real person from an automated bot. Understanding how it works is the first step to building automations that are resilient.

Behavioral and technical fingerprinting

LinkedIn’s detection is more clever than just counting profile visits. It analyzes the unique technical and behavioral signature of every visitor — a process called fingerprinting — to build a profile and decide if you’re a person or software.

A few key signals give the game away:

- Request Rate and Timing: Humans are unpredictable. A scraper hitting a new profile every 500 milliseconds with perfect consistency is a dead giveaway.

- Navigation Patterns: A real user might go from a search result to a profile, then to a company page. A scraper often hammers a list of profile URLs directly, which looks unnatural.

- User Agent Strings: Your browser identifies itself with a user agent (e.g., "Chrome on macOS"). Clumsy scrapers often use generic or outdated strings.

- IP Address Reputation: A connection from a known data center IP address is immediately more suspicious than one from a residential Wi-Fi network.

LinkedIn bundles these signals into a real-time fraud score. If your activity strays too far from human patterns, the system flags you.
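To see why perfectly even timing stands out, here is a toy sketch of one such signal: the variability of the gaps between requests. This is not LinkedIn's actual scoring logic, which is not public; the function names, the sample sessions, and the 0.1 threshold are all made up for illustration.

```python
from statistics import mean, pstdev


def timing_regularity(timestamps):
    """Return the coefficient of variation of the gaps between requests.

    Values near 0 mean the gaps are almost identical, which is unusual
    for a person clicking through pages.
    """
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        raise ValueError("need at least three timestamps spanning a nonzero interval")
    return pstdev(gaps) / mean(gaps)


def looks_automated(timestamps, threshold=0.1):
    """Flag a session whose request spacing is suspiciously uniform.

    The 0.1 threshold is an arbitrary illustrative value.
    """
    return timing_regularity(timestamps) < threshold


# Seconds since session start (made-up data).
bot_session = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
human_session = [0.0, 4.2, 5.1, 19.8, 23.0, 61.4]

print(looks_automated(bot_session), looks_automated(human_session))
```

A real system would combine many signals like this one (navigation paths, user agents, IP reputation) rather than rely on timing alone.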

Common defense mechanisms

When LinkedIn's system gets suspicious, it has a few tools it can use. These defenses are designed to stop automated scripts while causing minimal disruption for legitimate users.

Here’s the deal:

- Rate Limiting: This is the most basic defense. LinkedIn has an invisible limit on how many actions you can perform in a certain period. Exceed it, and you’ll get a temporary timeout.

- CAPTCHA Challenges: The classic "I'm not a robot" test is effective against simple bots. If your activity looks automated, LinkedIn will present a CAPTCHA puzzle.

- IP Address Blacklisting: If a single IP address sends a massive volume of requests, LinkedIn will block it. This is why serious data extraction uses proxies — to spread requests across many different IPs.

- Login Walls and Account Suspension: After viewing a few public profiles without being logged in, LinkedIn often puts up a login wall. If you’re using a real account for scraping and repeatedly break the rules, you’ll face restrictions or a permanent ban.
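Rate limiting, the first defense in the list above, can be pictured with a small sliding-window counter seen from the server's side. This is a generic textbook sketch, not LinkedIn's implementation; the class name, the limit of three requests per minute, and the client identifier are assumptions.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allows at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = {}  # client id -> deque of recent request times

    def allow(self, client_id, now):
        """Record a request at time `now` and report whether it is allowed."""
        recent = self._history.setdefault(client_id, deque())
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # over the limit: the caller would time out or challenge
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
decisions = [limiter.allow("198.51.100.7", now=t) for t in (0, 1, 2, 3, 61)]
print(decisions)
```

In this run the fourth request is refused because three requests already fall inside the 60-second window, and the fifth is allowed once the earliest ones have aged out.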

The trend is moving away from aggressive scraping and toward more ethical approaches. This means smarter throttling, using human-in-the-loop validation, and prioritizing official API partnerships. It's a more careful approach, but it drastically reduces ban rates. This is precisely why building a smarter, resilient process is non-negotiable; you can learn more in our guide on how to build a resilient LinkedIn scraper.

What are safe alternatives for LinkedIn automation?

Relying on a brute-force LinkedIn scraper is a fragile strategy. A single platform update can bring your entire pipeline crashing down. The smarter, more resilient approach is to build workflows that are both compliant and durable, using officially approved channels and intelligent automation that works with the platform, not against it.

This isn't just a minor tweak in tactics. It's a fundamental shift away from the endless cat-and-mouse game. Instead, we're building professional, sustainable systems that last.

Working with official APIs

The most robust alternative is using LinkedIn's official Application Programming Interfaces (APIs). An API is a sanctioned, secure doorway that lets software applications talk to each other in a structured way. Instead of deciphering messy HTML, you get clean, organized data straight from the source.

Of course, LinkedIn's APIs come with a clear set of rules:

- Limited Scope: The APIs are built for specific use cases, mainly integrations for marketing, sales (through Sales Navigator), and recruiting (via Recruiter).

- Rate Limits: LinkedIn strictly controls how many API requests you can make in a certain amount of time.

- Cost: Access to valuable data often requires a subscription to their premium services.

Even with these guardrails, APIs are the gold standard for dependable integrations. Using a powerful API integration platform helps you connect these official data streams into your existing tools, creating a seamless and compliant flow of information.

Adopting a human-in-the-loop (HITL) model

Not every task can or should be fully automated. This is where a Human-in-the-Loop (HITL) model shines, creating a powerful partnership between AI and human judgment. In this setup, AI does the heavy lifting, then hands off to a human for the final review or for steps that need nuance.

For example, an AI could generate a list of 100 potential sales prospects. But before any outreach, a sales rep reviews that list to confirm each prospect is a good fit and personalizes the opening message. This approach gives you the speed of automation with the quality control of a human expert.
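The hand-off in that example can be sketched as a simple approval gate: an automated step shortlists candidates, and nothing moves to outreach until a person has approved it and written an opening line. The `Prospect` type, the scores, and the 0.7 cutoff are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prospect:
    name: str
    company: str
    fit_score: float          # produced by the automated step
    approved: bool = False    # set only by a human reviewer
    opening_line: str = ""


def shortlist(prospects, min_score=0.7):
    """Automated step: keep only candidates above a score threshold."""
    return [p for p in prospects if p.fit_score >= min_score]


def review(prospect, approve, opening_line=""):
    """Human step: record the reviewer's decision and personal note."""
    prospect.approved = approve
    prospect.opening_line = opening_line if approve else ""
    return prospect


def ready_for_outreach(prospects):
    """Only approved prospects with a personalized line move forward."""
    return [p for p in prospects if p.approved and p.opening_line]


candidates = [
    Prospect("Ada Example", "Example Corp", 0.91),
    Prospect("Sam Sample", "Sample Ltd", 0.42),
    Prospect("Lee Placeholder", "Placeholder Inc", 0.78),
]
queue = shortlist(candidates)
review(queue[0], approve=True, opening_line="Enjoyed your talk on data quality.")
review(queue[1], approve=False)
outreach = ready_for_outreach(queue)
print([p.name for p in outreach])
```

The design point is that `approved` defaults to `False`, so a prospect the reviewer never looks at can never reach the outreach list by accident.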

To steer clear of the risks tied to scraping, it's crucial to understand how to use LinkedIn for recruiting effectively and ethically by working within its intended features.

A comparison of LinkedIn data collection methods

Choosing the right way to gather data from LinkedIn involves trade-offs between risk, scale, and skills. The table below breaks down the most common methods. Methodology note: Risk level is based on potential for account suspension and legal challenges as of Q1 2025 industry reports.

Ultimately, official APIs and HITL models provide the most sustainable paths forward, keeping your operations safe while still enabling powerful automation.

Building practical automations

The real goal isn't just to extract data, but to automate the actions you take with that data. A modern workflow automation tool lets you build intelligent pipelines that mimic what a professional would do, just at a much larger scale.

Here’s the deal: you can build an incredibly powerful system without ever touching risky scraping tools. A perfect example is automatically syncing your new LinkedIn connections to your CRM.

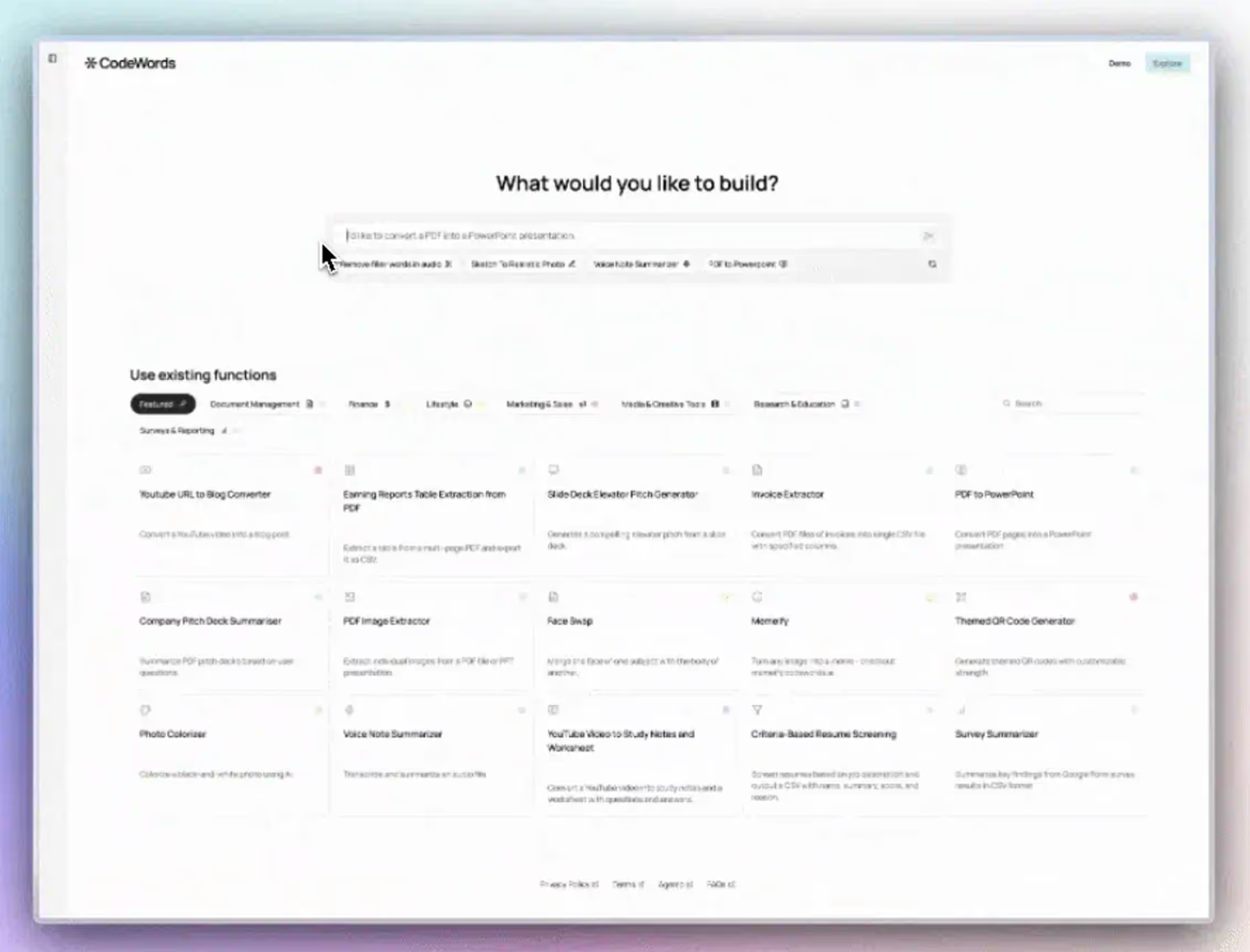

Here’s what that looks like in CodeWords:

CodeWords Workflow: Sync New LinkedIn Connections to CRM

Prompt: "When I add a new connection on LinkedIn, find their record in HubSpot. If it exists, update their status to 'Connected.' If not, create a new contact with their name and company, then create a task for me to follow up."

Output: A fully automated workflow that keeps your CRM perfectly in sync with your professional network.

Impact: Saves approximately 45 minutes per day of manual data entry and reduces lead follow-up time by 90% (CodeWords Internal Data, 2024).
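For readers curious about the logic behind a prompt like this, the update-or-create step can be sketched in plain Python. This is not how CodeWords or HubSpot implement it: the in-memory dictionary stands in for a CRM, matching by name is a simplification, and the sketch reads the prompt as creating a follow-up task only for new contacts.

```python
def sync_connection(crm, tasks, connection):
    """Update an existing contact or create one, then return the action taken.

    `crm` is a dict keyed by lowercase full name and `tasks` is a list of
    follow-up reminders. Both are stand-ins for a real CRM.
    """
    key = connection["name"].strip().lower()
    if key in crm:
        crm[key]["status"] = "Connected"
        return "updated"
    crm[key] = {
        "name": connection["name"],
        "company": connection["company"],
        "status": "Connected",  # assumption: new contacts start as connected
    }
    tasks.append(f"Follow up with {connection['name']}")
    return "created"


# Made-up starting state and new connections.
crm = {"ada example": {"name": "Ada Example", "company": "Example Corp", "status": "Lead"}}
tasks = []
new_connections = [
    {"name": "Ada Example", "company": "Example Corp"},
    {"name": "Sam Sample", "company": "Sample Ltd"},
]
results = [sync_connection(crm, tasks, c) for c in new_connections]
print(results, tasks)
```

A production version would match on a stable identifier such as an email address or CRM record ID, since names are neither unique nor consistently formatted.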

This approach stays on the compliant side of the line because it automates your own personal workflow rather than scraping data in bulk. It's a mature choice: building a durable, efficient process that helps you grow your business without putting your account or your reputation on the line.

What are the strategic implications for your business?

Moving past the technology, the real conversation isn't about scraping data. It's about architecting a predictable growth engine. This requires shifting your mindset from short-term data grabs to creating long-term, scalable assets.

The biggest implication here is risk. When you lean on fragile scraping scripts, you’re not building an asset — you're creating a liability. These scripts are a single point of failure that can snap overnight when LinkedIn pushes an update, bringing your entire outreach to a halt. By choosing compliant, API-first automation, you de-risk your sales and marketing pipelines, turning a brittle process into a reliable one. That stability lets you forecast accurately and scale with confidence.

Adopting a smarter automation strategy also builds a culture that respects data privacy and plays by the rules, which is non-negotiable for protecting your brand's reputation. This professional approach signals that your business is built for sustainability, not just quick wins. This is what modern, intelligent automation looks like: combining the power of AI with human judgment to build systems that are both effective and responsible.

CodeWords is built to help you construct these kinds of sophisticated, human-friendly workflows.

FAQs

Is it actually legal to scrape LinkedIn?

Yes, scraping publicly available data from LinkedIn is generally considered legal in the United States, following the hiQ Labs v. LinkedIn court case. However, accessing data that requires a login is a direct violation of LinkedIn’s User Agreement and can lead to account suspension.

Can I scrape LinkedIn without an account?

Technically, yes, but it’s extremely limited. You can typically view only a few public profiles before LinkedIn presents a login wall. This method is not reliable or scalable for any serious business process.

How many profiles can I scrape per day from LinkedIn?

There is no official number. A common rule of thumb among practitioners is that exceeding 80–100 profile views per day from a single account dramatically increases the risk of being flagged. Safe automation mimics human behavior by working in smaller, randomized batches with built-in delays.

Can LinkedIn detect my Python scraper?

Absolutely. A basic scraper built with simple libraries has a clear technical "fingerprint" that LinkedIn's security systems can easily identify. To have any chance of avoiding detection, a script needs to use sophisticated techniques like rotating residential proxies and managing browser cookies.

Building a durable growth engine isn’t about finding clever hacks; it’s about creating reliable automations that respect platform boundaries. CodeWords gives you the tools to build smart, human-friendly workflows that are both effective and compliant.