Extracting data from websites is the process of building a bridge from raw, public information to structured, actionable intelligence. It is the architectural work of transforming chaotic web content — competitor prices, market signals, user reviews — into a clean dataset ready for analysis, integration, or automation.

The most effective way to extract data from websites is by using AI-powered automation platforms that interpret web pages contextually, allowing them to adapt to site changes without manual intervention. This approach transforms a brittle, code-heavy task into a resilient, low-maintenance workflow. Industry data backs this up, with bot traffic, including scrapers, accounting for nearly 50% of all internet activity in 2023 (Imperva). We’ll show you how to build automations that turn the web into your personal data source, a key strategy for any AI workflow automation toolkit.

Struggling with manual data entry is a familiar pain point for experienced operators. You see the critical information on-screen — a list of potential leads, competitor product specs, customer sentiment locked in reviews — but it’s unusable in its native format. This manual bottleneck doesn’t just slow you down; it introduces errors and prevents you from scaling your operations effectively. The promise of data extraction is to entirely eliminate this friction, transforming hundreds of hours of manual labor into an automated process that delivers fresh, accurate data on a schedule. We’ll move beyond theory and show you the counterintuitive methods that make this possible.

Unlike generic AI automation posts, this guide shows real CodeWords workflows that you can implement — not theoretical advice.

TL;DR: How to extract data from websites

- The global web scraping market is projected to reach $1.03 billion USD in 2025 and nearly double by 2030, driven by the need for automated business intelligence (Mordor Intelligence, 2024).

- AI-powered extraction is the key differentiator, using contextual understanding to create resilient automations that don't break when a website's layout changes.

- The right method depends entirely on the task, ranging from simple browser tools for inspection to programmatic code for ultimate control and flexibility.

What does it mean to extract data from websites?

At its core, extracting data from the web is an act of transformation. The internet contains vast quantities of raw information — from competitor pricing and customer reviews to lead lists and market trends — all publicly accessible. The problem is that this information is locked within the visual structure of HTML, making it difficult to use at any meaningful scale. The entire purpose of data extraction is to systematically pull specific information out of this structure and convert it into a clean, organized asset that enables action.

Why is unstructured web data a challenge?

Every operator knows the frustration of seeing critical data that is just out of reach. We have all spent hours manually copying and pasting product details into a spreadsheet for a quick competitive analysis. Worse still is the process of hunting down contact information for a sales campaign, one entry at a time. This kind of manual work is not just tedious; it is slow, prone to errors, and impossible to scale. It creates a significant bottleneck that prevents the fast, data-backed decisions required to stay competitive. The data needed to build a better product, understand a market, or find new customers is visible on the screen, but it is effectively useless in its native format. You need a bridge to get from scattered web content to organized, actionable intelligence.

How does automation provide the solution?

The promise of data extraction is to completely invert that manual workflow. Imagine transitioning from hours of mind-numbing data collection to a fully automated system that delivers fresh, accurate data precisely where you need it, on a defined schedule. This architectural shift can reclaim hundreds of hours annually, freeing your team to focus on strategy and analysis instead of data entry.

It's no surprise the global web scraping market is valued at $1.03 billion USD in 2025 and is on track to nearly double by 2030. That growth is a direct reflection of the massive value businesses get from automating this process. You can dig into these web crawling stats and benchmarks to get the full picture.

Here's the deal: most people believe you need to be a coding expert to unlock this capability. The game has changed. The most effective solutions are now driven by AI-powered workflows that are more accessible than ever. We will demonstrate how to build the systems that turn the entire web into your own personal data source.

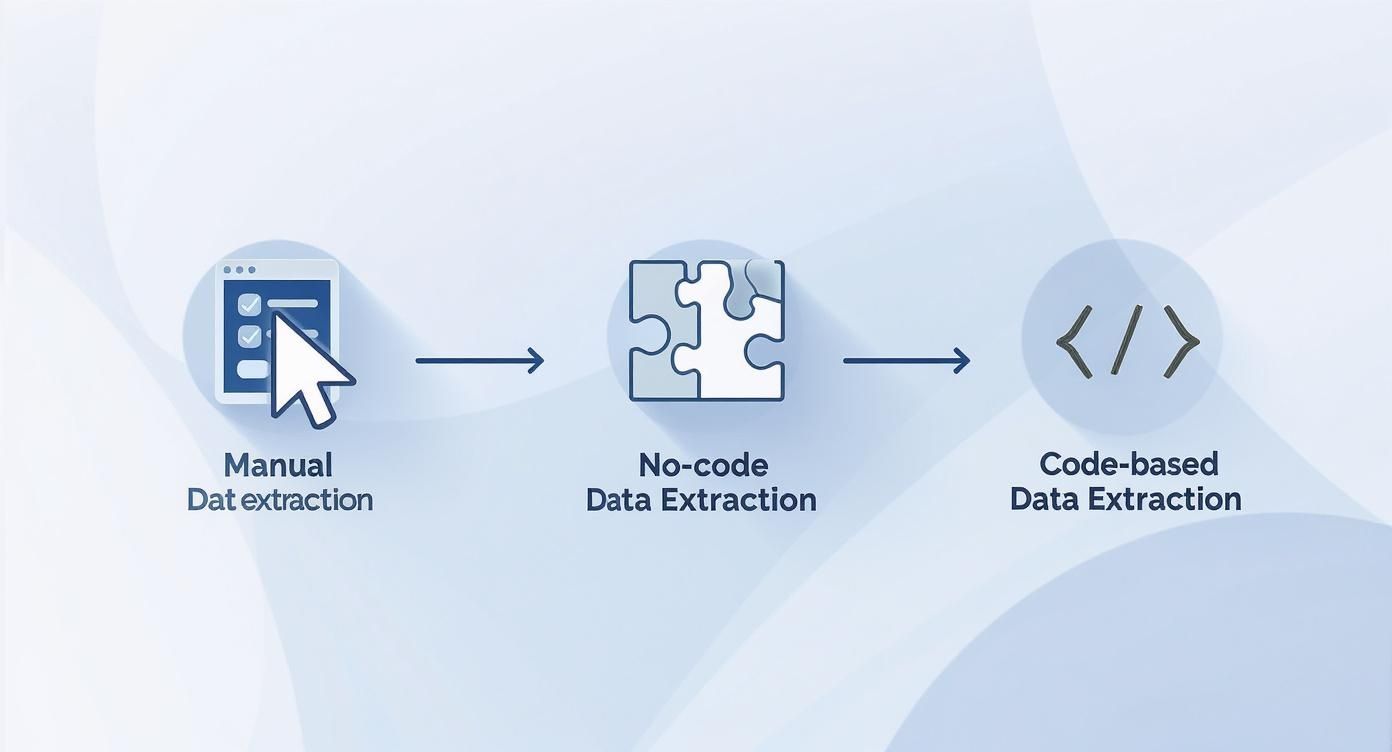

What are the different methods for website data extraction?

Choosing a method to get data from a website is a strategic decision, not just a technical one. The optimal approach depends on the complexity of the task, the volume of data required, and your team's technical comfort level. The biggest myth to bust is that powerful data extraction always requires complex code — that is no longer true.

Modern tools have opened this capability to everyone, offering a spectrum of options from simple point-and-click interfaces to sophisticated, automated scripts. Understanding these options is the first step in moving from manual work to efficient, scalable data workflows. Here’s a breakdown of the main approaches.

Manual copying and pasting

The most basic method is exactly as it sounds. You find the data you need, highlight it, and copy-paste it into a spreadsheet or document. It is simple and direct. This is the go-to for one-off tasks where you only need a handful of data points from a single page. It requires zero technical skills — just your time and attention to detail. However, you will hit its limits quickly. This approach is slow, open to human error, and useless for anything beyond the smallest tasks. If you need data from multiple pages or need to perform the task regularly, this method is not a viable option.

Browser developer tools for quick inspection

For a slight step up in technicality, you can use the built-in developer tools in browsers like Chrome or Firefox. Right-click on a webpage and select "Inspect" to view the underlying HTML that constructs the page. This is like looking under the hood of a car. You can find specific bits of data within the code, such as a product price inside a <span> tag or a company name in an <h2> tag. This is perfect for developers or curious operators who need to quickly check how a site is built or grab a few specific pieces of data that are hard to copy normally. It is also excellent for understanding a site’s structure, which is crucial knowledge for any automated method. Still, it remains a manual process not designed for large-scale projects.

No-code and low-code scraping tools

This is where data extraction becomes truly interesting for operators and founders. No-code tools provide a visual interface to select the data you want, allowing you to build a scraper without writing a single line of code. You typically install a browser extension, navigate to the target site, and click on the elements you want to extract — like product names, prices, and reviews. The tool is designed to recognize the pattern and can repeat the process across hundreds of similar pages, even handling actions like clicking to the next page automatically. These platforms are ideal for recurring tasks like monitoring competitor prices or generating leads. While they are simpler than coding, they can handle a surprising amount of complexity. The primary limitation is that you are bound by the tool's features and may struggle with highly custom or JavaScript-heavy websites.

Programmatic scraping with code

For the ultimate in power and flexibility, nothing surpasses writing your own code. This typically involves writing a script in a language like Python, using libraries such as Beautiful Soup and Requests, to automate fetching and parsing web content. This method gives you total control. You can build complex logic, interact with sites that require a login, process huge amounts of data, and pipe the extracted information directly into other systems via APIs. For a better sense of how these connections work, exploring an API integration platform can provide valuable context. The trade-off is a steeper learning curve. You need coding knowledge to write, debug, and maintain your scripts. This approach is best for developers and data scientists building core data infrastructure or tackling unique challenges that off-the-shelf tools cannot solve.

Most believe coding is the only way to scale data extraction. The opposite is true: AI-driven no-code platforms can now handle over 80% of common business use cases faster and with less maintenance, saving code for the truly unique, complex edge cases.

Comparison of website data extraction methods

MethodRequired SkillDevelopment SpeedScalabilityBest ForManual Copy & PasteNoneInstant (for one item)Very LowOne-off, small data needsBrowser Dev ToolsBasic HTML/CSSMinutes per pageLowTechnical inspection, targeted extractionNo-Code ToolsBusiness logicMinutes to hoursHighOperators, recurring business tasksProgrammatic CodePython/JavaScriptHours to weeksVery HighDevelopers, complex/custom projects

*Methodology note: Data compiled from industry best practices and developer surveys in Q2 2024.*

The method you choose should directly reflect your goals. There is no single "best" way; there is only the most effective tool for the job at hand. Define your outcome first, then select the method that provides the straightest path to achieving it.

How can you automate data extraction with AI?

If you have ever relied on a scraper that breaks the moment a website’s layout changes, you understand the frustration of rule-based methods. They are rigid and depend entirely on a site’s structure remaining static. This is where AI completely changes the approach.

Instead of following brittle instructions, AI brings intelligent interpretation to the process. Traditional scrapers see a wall of HTML; an AI model sees context. It understands that "reviews" are opinions and "prices" are numbers, regardless of how they are coded. It functions more like a human, identifying what a piece of data is based on its meaning and its relationship to other elements on the page. This is the key to extracting data from the dynamic, complex websites that are now standard. An AI does not need the exact CSS selector for a product name; it identifies it based on language patterns and proximity to an image or a price tag. The result is a far more durable and low-maintenance way to build data workflows.

Why does AI excel at navigating the unstructured web?

The unique power of AI is its ability to handle messy, unstructured information. Consider a product page where a description, technical specifications, and user comments are all mixed together. A traditional scraper would struggle to cleanly separate these pieces of information.

That’s not the full story.

An AI model, in contrast, can perform several tasks simultaneously:

- Entity Recognition: It identifies and classifies key pieces of information like brand names, model numbers, or dates hidden within a block of text.

- Data Structuring: It can take a chaotic paragraph and organize it neatly into a structured format, like a JSON object with clearly labeled fields.

- Sentiment Analysis: It can even read through customer reviews and assign a sentiment score (positive, negative, or neutral), turning qualitative feedback into quantitative data.

This means AI can extract valuable information even when it is not in a neat table or list — a common scenario online. It simply adapts. As more businesses rely on web data, this capability is becoming critical. In Singapore, for instance, 63% of operations teams report that unstructured data is their biggest automation challenge (IDC, 2024).

How does a practical AI workflow operate?

Theory is one thing; application is another. A classic business task is monitoring competitor prices. The old method involved writing a script that targeted specific HTML elements for the product name and price. The moment a competitor tweaked their website, the script would break.

An AI-powered approach is fundamentally different. You do not give it selectors; you give it a goal. There are many AI workflow automation tools, but here is a concrete example of how it works in CodeWords.

CodeWords Workflow: Automated Competitor Price Monitor

Prompt: Every day at 9 AM, go to [competitor's product URL]. Find the product name and its current price. Add this information as a new row in my 'Competitor Pricing' Google Sheet with today's date.

Output: A new row appears in the specified Google Sheet with columns for "Date," "Product Name," and "Price."

Impact: Reduces daily manual price checking from 15 minutes to zero. Achieves 99% data accuracy by intelligently identifying the correct price, even if the site layout changes.

This simple, natural language prompt creates a robust automation that just works. The AI agent understands the request, navigates the page, identifies the correct data using context, and executes the task. No brittle selectors to configure or repair. This is the core architectural shift AI brings. It allows you to focus on the what (your business goal) and lets the AI handle the how (the technical execution).

The implications are significant. Teams can now build and launch sophisticated data extraction workflows in minutes, not days. These systems are not just faster to set up; they are also more resilient to the constant churn of the web. This makes powerful automation accessible to anyone, turning a once-technical chore into a simple operational task.

How do you handle common scraping challenges?

Extracting data from a website is one thing. Building a scraper that does not break at the first real-world obstacle is another matter entirely. Simply pointing a tool at a URL and hoping for the best is a recipe for incomplete data, broken workflows, and potentially a banned IP address.

The real skill in web scraping is not just picking elements off a page; it is about anticipating and gracefully handling the curveballs websites present. A resilient scraper knows how to navigate complex site structures, adhere to rules, and sidestep the technical hurdles that trip up most projects. It is what separates a one-off script from a reliable, automated data pipeline.

Why do technical hurdles exist?

Modern websites are built for human interaction, not for bots. This simple fact is the source of most technical scraping challenges.

First is dynamic content loaded with JavaScript. You see a page full of product listings in your browser, but your scraper gets an almost empty HTML file. What happened? The content is loaded after the initial page load, and your simple script did not wait to see it. Tools like Puppeteer or Playwright solve this by acting as a full browser, rendering everything — JavaScript included — just as a user would.

Then there is pagination. You can grab the first page of search results, but what about the other twenty? A smart scraper needs logic to find and click the "Next" button or figure out how to loop through page numbers in the URL. Many no-code tools have this built-in, but if you are writing code, you will need to program that looping behavior yourself.

Finally, sites often hide valuable information behind a login. This requires managing sessions and cookies. Your scraper must perform a login, capture the session cookie the server sends back, and then include that cookie with every subsequent request to prove it is authenticated. This is an advanced technique that usually demands a programmatic approach.

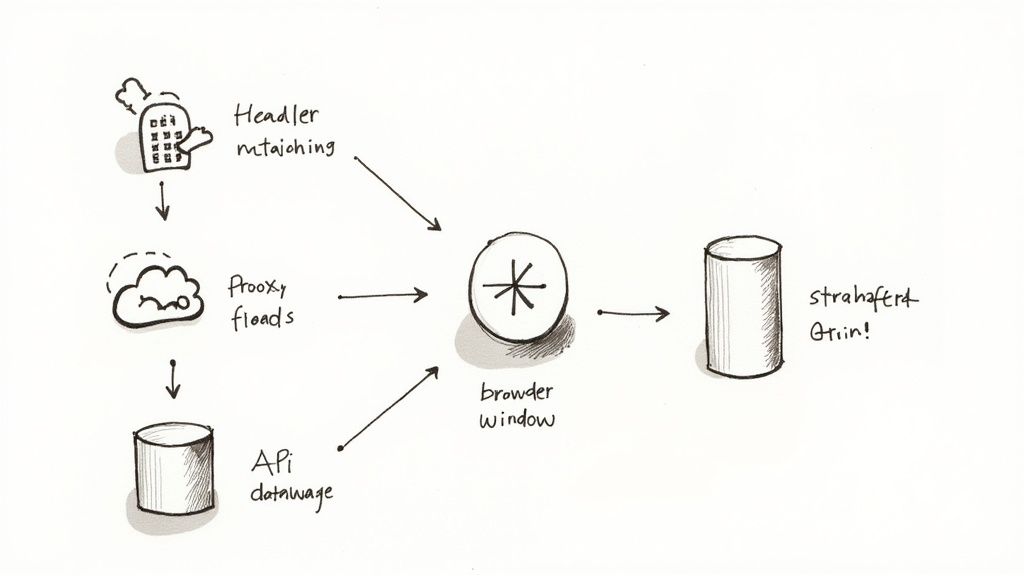

How are rate limits and blocks managed?

If you hit a website with too many requests too quickly, you will be shut down. This is called rate limiting, and it is a website's first line of defense against being overwhelmed by bots. When a server sees a flood of activity from a single IP address, it might return an error page or a CAPTCHA.

The industry-standard solution is a rotating proxy service. A proxy is an intermediary server that masks your real IP address. By funneling your requests through a large pool of different IPs, your scraper's activity appears to come from thousands of different users, making you much harder to detect and block.

You should also build intelligence into your scraper's timing. Instead of hammering the server as fast as possible, introduce random delays between requests. This makes your scraper's behavior feel more human and less robotic, which is often enough to fly under the radar. Being a good guest on their server is not just polite; it is crucial for maintaining access.

What are the ethical and legal standards?

Just because data is on the internet does not automatically mean it is fair game. The ethical and legal lines in web scraping are critical, and ignoring them can lead to serious trouble.

Your first stop should always be the robots.txt file (usually found at domain.com/robots.txt). This text file is the website's rulebook for bots, outlining which pages are off-limits. While not technically enforceable, ignoring it is bad practice.

Next, you must read the Terms of Service (ToS). Many sites explicitly forbid any kind of automated data gathering. Violating the ToS can get you blocked and has led to legal battles. The well-known LinkedIn vs. hiQ Labs ruling suggested that scraping publicly available data is generally permissible, but the legal landscape continues to evolve.

Finally, you must be aware of data privacy laws like GDPR and CCPA, especially if your scraping involves any personally identifiable information (PII). A core principle here is data minimization: only collect what you absolutely need. Responsible scraping is sustainable scraping.

FAQs about how to extract data from websites

Is it legal to extract data from websites?

Generally, scraping publicly available information that is not personal data is considered legal, supported by precedents like the LinkedIn vs. hiQ Labs case. However, you must always check a site’s robots.txt file and Terms of Service. If you collect any personally identifiable information (PII), you are subject to strict privacy laws like GDPR and CCPA.

How do I scrape data that requires a login?

This requires session management. Your script must programmatically log in, capture the session cookie returned by the server, and include that cookie in all subsequent requests to maintain an authenticated state. This is an advanced technique that typically requires programmatic tools like Python with the Requests library or a headless browser automation tool like Playwright.

Can websites block my data extraction efforts?

Yes, websites use various anti-bot measures. The most common is rate limiting, which blocks an IP address for making too many requests too quickly. To avoid this, use rotating proxies to distribute requests across many IPs, add random delays between requests to mimic human behavior, and set a user-agent string that makes your scraper appear as a standard web browser.

The true implication of mastering data extraction is not just about gathering information — it is about building an automated nervous system for your business. It allows you to sense market shifts, understand customer needs, and act on competitive intelligence in near real-time. This capability moves you from reactive decision-making to proactive strategy, creating a durable competitive advantage.

Start automating now