Every founder knows the feeling: spending hours copying competitor pricing, manually checking inventory updates, or tracking review sentiment across dozens of sites. By 2025, web scraping has evolved from a technical novelty into operational infrastructure, yet most guides still treat it like black magic reserved for engineers.

Web scraping extracts structured data from websites automatically, transforming unstructured HTML into usable datasets for analysis, monitoring, or integration. Companies using scrapers report 40-60% time savings on competitive intelligence tasks (McKinsey Digital, 2024).

The counterintuitive truth? You don't need coding skills to start scraping effectively. Modern AI automation platforms have compressed what once required Python expertise into visual workflows that operators can deploy in minutes.

Manual data collection doesn't scale. Copying product details from 50 competitor sites weekly consumes 8-12 hours that could drive strategy instead.

This guide shows how to build your first scraper in under 30 minutes, whether you're monitoring prices, aggregating leads, or tracking content changes — with zero code required.

The best scraping strategy isn't about choosing the "right" tool. It's about understanding when simple extraction beats complex crawling, and how to avoid the legal traps that sink 40% of first-time scrapers (Stanford Internet Observatory, 2023).

TL;DR:

- Web scraping converts unstructured HTML into structured, actionable data, delivering 40–60% time savings on competitive intelligence and research tasks, while enabling teams to monitor prices, inventory, leads, and content changes continuously instead of manually.

- Modern scraping no longer requires coding: visual and AI-native platforms abstract HTML parsing, selector logic, JavaScript handling, and rate limiting into point-and-click workflows that operators can deploy in under 30 minutes.

- The highest-impact scraping strategies focus on simple, repeatable extraction tied directly into business workflows (CRMs, pricing systems, alerts), while respecting legal boundaries like robots.txt, terms of service, and data privacy regulations to avoid blocks and compliance risk.

What exactly is web scraping and why does it matter?

Web scraping pulls specific data elements from web pages programmatically. Think of it as teaching software to read websites the way humans do — identifying patterns in HTML structure, then extracting text, images, prices, or contact details into spreadsheets or databases.

The practical applications span industries. E-commerce teams scrape competitor catalogs to maintain price parity. Recruiters extract LinkedIn profiles matching job criteria. Real estate investors monitor listing sites for undervalued properties. Content teams track brand mentions across review platforms.

Here's what separates effective scraping from busywork: automation frequency. Scraping once replicates manual effort. Scraping hourly or daily creates competitive advantages. A fashion retailer monitoring 200 competitor SKUs every 6 hours spots pricing windows that manual checks miss entirely.

The technical mechanism breaks into three steps: requesting the webpage's HTML, parsing its structure to locate target data, then saving extracted information in structured format. Modern tools abstract this complexity behind visual selectors — you click the element you want, the platform handles the extraction logic.

Singapore-based ops teams report scraping saves 12-15 hours weekly on market research alone (Asia Tech Research, Q2 2024). That time compounds when you're tracking 50+ data sources continuously.

How does web scraping actually work under the hood?

Every webpage consists of HTML — the structural code browsers interpret to display content. When you visit a site, your browser requests this HTML from servers, then renders it visually. Scrapers mimic this process but skip the rendering, reading raw HTML directly.

The extraction relies on selectors: CSS classes, HTML tags, or XPath expressions that pinpoint specific elements. If a product title appears in <h1 class="product-name">, your scraper targets that selector to grab the text inside. Change the selector, extract different data.
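To make the selector idea concrete, here is a minimal sketch using only Python's standard library. It assumes a simplified "tag plus class" selector rather than full CSS or XPath, and the sample HTML, class name `ClassTextExtractor`, and helper `extract_text` are illustrative, not part of any particular scraping platform.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collects the text inside every <tag class="..."> that matches a target."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.depth = 0        # > 0 while we are inside a matching element
        self.results = []     # one cleaned string per matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self.depth > 0:
            # Track nested elements with the same tag name so we close correctly.
            if tag == self.tag:
                self.depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self.depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if self.depth > 0 and tag == self.tag:
            self.depth -= 1
            if self.depth == 0:
                # Collapse runs of whitespace into single spaces.
                self.results.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self.depth > 0:
            self._buffer.append(data)


def extract_text(html, tag, css_class):
    """Return the text of every element matching the tag and class."""
    parser = ClassTextExtractor(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical page fragment for illustration.
SAMPLE_HTML = """
<html><body>
  <h1 class="product-name">  Trail Running Shoe </h1>
  <span class="price">$89.00</span>
</body></html>
"""

print(extract_text(SAMPLE_HTML, "h1", "product-name"))  # ['Trail Running Shoe']
print(extract_text(SAMPLE_HTML, "span", "price"))       # ['$89.00']
```

Changing the two selector arguments is all it takes to pull a different field from the same page, which is the behavior visual tools wrap in a click.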

However, there's a problem most tools ignore — dynamic content. Modern sites load data via JavaScript after the initial HTML loads. Static scrapers see empty containers where products should be. This requires headless browsers: automated Chrome or Firefox instances that execute JavaScript before extraction.

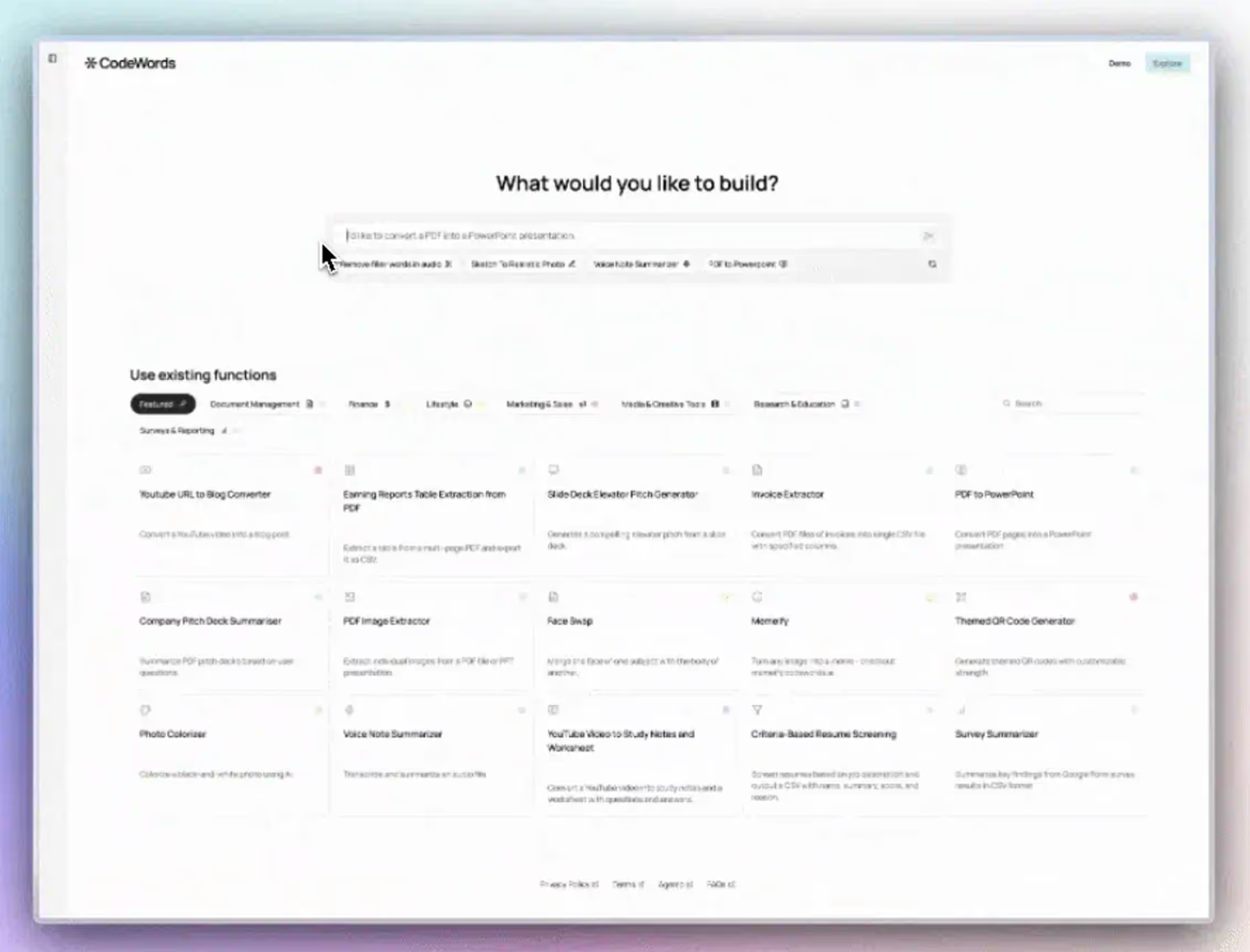

CodeWords handles both static and dynamic scraping through its unified workflow builder. You specify the URL, select elements visually, then choose whether to wait for JavaScript rendering. No switching between tools or learning browser automation APIs.

Rate limiting matters more than technical sophistication. Servers track request frequency from IP addresses. Scraping too fast triggers blocks. Professional scrapers include delays between requests, typically 2-5 seconds, and rotate user agents to appear as different browsers.
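A rough sketch of the delay pattern described above, assuming a randomized 2-5 second pause between requests. The function names, the user agent string, and the injectable `fetch_page` and `sleep` parameters are hypothetical choices made so the pacing logic can be tried without touching a real site.

```python
import random
import time
import urllib.request


def fetch(url, user_agent="example-price-monitor/0.1"):
    """Fetch one page as text. The user agent string is a made-up example."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def polite_fetch_all(urls, fetch_page=fetch, min_delay=2.0, max_delay=5.0,
                     sleep=time.sleep):
    """Fetch URLs one at a time, pausing a random interval between requests."""
    pages = {}
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before each later one.
            sleep(random.uniform(min_delay, max_delay))
        pages[url] = fetch_page(url)
    return pages
```

Passing a stub for `fetch_page` and a list's `append` for `sleep` lets you confirm the pauses fall in the expected range before pointing the function at live pages.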

The workflow looks like this: HTTP request → HTML response → parse structure → apply selectors → extract data → transform format → store or integrate. Each step handles specific logic, but visual platforms compress them into drag-and-drop blocks.
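The last two stages of that chain, transform and store, might look like the sketch below. It assumes the earlier stages have already produced raw name and price strings, that prices use US-style formatting, and that CSV is the output format; `clean_price`, `transform`, `to_csv`, and the sample rows are illustrative names and data.

```python
import csv
import io
from decimal import Decimal, InvalidOperation


def clean_price(raw):
    """Turn a display string such as '$1,299.00' into a Decimal, or None if unparsable.

    Assumes US-style formatting (comma for thousands, dot for decimals).
    """
    digits = "".join(ch for ch in raw if ch.isdigit() or ch == ".")
    try:
        return Decimal(digits)
    except InvalidOperation:
        return None


def transform(raw_rows):
    """Trim names and convert price strings into numeric values."""
    return [
        {"name": row["name"].strip(), "price": clean_price(row["price"])}
        for row in raw_rows
    ]


def to_csv(rows):
    """Serialize cleaned rows to CSV text; missing prices become empty cells."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical output of the extraction stage.
RAW_ROWS = [
    {"name": "  Trail Running Shoe ", "price": "$89.00"},
    {"name": "Road Bike", "price": "$1,299.00"},
    {"name": "Gift Card", "price": "Call for price"},
]

print(to_csv(transform(RAW_ROWS)))
```

Keeping each stage as its own small function mirrors the block-per-step layout of visual builders and makes it easier to see which stage broke when a site changes.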

Which scraping methods should beginners choose?

Choosing scraping approaches depends on site complexity and data volume. Here's how common methods compare for typical use cases:

Methodology: Comparison based on typical operator experience levels, February 2025 pricing surveys across 12 platforms.

Most beginners overestimate complexity. If you're scraping public product listings or contact directories, browser extensions like Data Miner handle 80% of cases. Save advanced methods for sites with login requirements or bot detection.

That's not the full story: the scraping method matters less than workflow integration. Extracting data into spreadsheets creates manual transfer work. The better approach connects scrapers directly to your CRM, inventory system, or analytics dashboard. ScraperAPI and similar services provide pre-built integrations, but you're still bridging tools.

CodeWords eliminates integration friction by combining scraping with transformation and routing in single workflows. Scrape competitor prices → calculate percentage differences → update your pricing sheet → send Slack alerts when competitors undercut you by 10%+. No Zapier glue, no export-import cycles.
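The "calculate percentage differences" step in that chain is simple arithmetic. A small sketch follows, assuming the gap is measured relative to your own price and that SKUs are matched by identical keys; the function names and sample prices are invented for illustration and do not reflect how any specific platform implements the step.

```python
def percent_undercut(our_price, competitor_price):
    """Percentage by which the competitor is cheaper, relative to our price.

    Positive means the competitor undercuts us; negative means we are cheaper.
    """
    return (our_price - competitor_price) * 100 / our_price


def undercut_alerts(our_prices, competitor_prices, threshold=10.0):
    """Return (sku, gap_percent) pairs where the competitor undercuts by >= threshold."""
    alerts = []
    for sku, ours in our_prices.items():
        theirs = competitor_prices.get(sku)
        if theirs is None:
            continue  # competitor does not list this SKU, nothing to compare
        gap = percent_undercut(ours, theirs)
        if gap >= threshold:
            alerts.append((sku, round(gap, 1)))
    return alerts


# Hypothetical prices for illustration.
OURS = {"SKU-1": 100.0, "SKU-2": 50.0, "SKU-3": 20.0}
THEIRS = {"SKU-1": 85.0, "SKU-2": 48.0}

print(undercut_alerts(OURS, THEIRS))  # [('SKU-1', 15.0)]
```

Only SKU-1 triggers an alert here: it is 15% cheaper at the competitor, while SKU-2 is only 4% cheaper and SKU-3 has no competitor listing.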

What are the legal boundaries of web scraping?

Many people assume that scraping public data is always legal. It isn't: copyright, terms of service, and anti-hacking laws create gray zones that shift by jurisdiction and use case.

The foundational principle: robots.txt files declare which pages sites allow scrapers to access. Ignoring these directives doesn't guarantee legal trouble, but courts reference them in enforcement decisions. Major platforms like LinkedIn and Facebook aggressively pursue scrapers who violate their terms.
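Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline, made-up robots.txt so it runs offline; the bot name and URLs are placeholders, and a real check would load the file from the target site instead.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /checkout/
Crawl-delay: 5
"""


def build_rules(robots_txt):
    """Parse robots.txt text into a rule set that can be queried per URL."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


rules = build_rules(ROBOTS_TXT)

print(rules.can_fetch("example-bot", "https://example.com/products/shoe"))   # True
print(rules.can_fetch("example-bot", "https://example.com/account/orders"))  # False
print(rules.crawl_delay("example-bot"))                                      # 5
```

A passing check only tells you what the site's robots.txt permits. It does not replace reading the terms of service.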

In hiQ Labs v. LinkedIn (2022), the Ninth Circuit held that scraping publicly available data likely does not violate the Computer Fraud and Abuse Act. Accessing data behind logins or paywalls is a different matter and carries far greater legal risk. Personal data scraping faces additional GDPR and CCPA restrictions when handling EU or California residents' information.

Here's the deal: commercial use carries higher risk than personal research. Scraping product catalogs for internal price monitoring exists in a safer zone than reselling scraped datasets. Always check terms of service explicitly. 67% of blocked scrapers ignored basic compliance checks (Cloudflare Bot Report, 2024).

You might think anonymizing requests through proxies solves detection issues. Here's why not: sophisticated sites fingerprint browsers through canvas rendering, WebGL parameters, and timing attacks. Rotating IPs without matching browser profiles still triggers flags. Professional scraping requires residential proxies and browser fingerprint randomization.

The pragmatic approach: scrape public data that sites display without login, respect rate limits, identify your bot in user agent strings, and avoid circumventing technical access controls. When in doubt, contact site owners directly. Many permit scraping for legitimate business use if you explain your purpose.

How do you build your first scraper in 30 minutes?

Start with a clear extraction goal. Vague objectives like "get all competitor data" lead to bloated scrapers that break when site layouts change. Specific targets like "extract product names, prices, and availability status from Category X" produce maintainable workflows.

Choose a single-page site initially. Multi-page scraping requires pagination logic and state management that complicates debugging. Master extracting data from one product page before scaling to entire catalogs.

The visual selector method works like this: navigate to your target page, right-click the data element (product title, price, etc.), select "Inspect" to view HTML structure, note the CSS class or ID, then configure your scraper to target that selector. Chrome DevTools makes selector identification straightforward for non-developers.

Test extraction on 3-5 sample pages before automating. Site structures vary subtly. What works on one product page might break on another due to missing optional fields. Handle null values explicitly to prevent workflow failures.
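One way to handle null values explicitly is to separate required fields from optional ones and record `None` for anything missing instead of letting the workflow crash. This is a sketch under assumed field names; `build_record` and the shape of its input (a dict mapping each field to the list of strings a selector matched) are hypothetical.

```python
REQUIRED_FIELDS = ("name", "price")
OPTIONAL_FIELDS = ("availability", "rating")


def build_record(matches):
    """Build one record from selector matches.

    `matches` maps a field name to the list of strings its selector found.
    Returns (record, missing_required), where absent or empty fields are None.
    """
    record = {}
    for field in REQUIRED_FIELDS + OPTIONAL_FIELDS:
        values = matches.get(field) or []
        text = values[0].strip() if values else ""
        record[field] = text or None
    missing_required = [f for f in REQUIRED_FIELDS if record[f] is None]
    return record, missing_required


# Hypothetical selector output for three sample pages.
SAMPLE_PAGES = [
    {"name": ["Trail Shoe"], "price": ["$89.00"], "availability": ["In stock"], "rating": ["4.6"]},
    {"name": ["Road Bike"], "price": ["$1,299.00"], "availability": ["In stock"]},
    {"name": ["Gift Card"], "price": []},
]

for page in SAMPLE_PAGES:
    record, missing = build_record(page)
    status = "OK" if not missing else "needs review: missing " + ", ".join(missing)
    print(record["name"], "->", status)
```

Running this across your 3-5 sample pages shows at a glance which pages lack optional fields (harmless) and which lack required ones (a selector or page-type problem to fix before automating).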

CodeWords Workflow Blocks for basic scraping include: HTTP Request to fetch page HTML, Parse HTML to apply selectors, Transform Data to clean extracted text, and Write to Sheet or Send to Webhook for output. Chain these blocks, configure selectors visually, then schedule execution frequency.

Common first-scraper mistakes: scraping too frequently (triggers IP bans), not handling missing elements (causes crashes), ignoring pagination (collects incomplete datasets), and skipping validation (propagates extraction errors). Build incrementally and verify output quality before scaling volume.

When should you avoid scraping entirely?

APIs beat scraping when they exist. Official APIs provide structured data without parsing HTML, include documentation, and rarely break from layout changes. Check for public APIs before building scrapers — sites like Crunchbase, Yelp, and most e-commerce platforms offer developer access.

Pre-built datasets from Data.world or industry providers often cost less than maintaining scrapers. Competitive intelligence services aggregate data professionally, handling legal compliance and quality assurance. Scraping makes sense when your data needs are unique or timing-sensitive.

Sites with aggressive bot detection — think major airlines, ticket platforms, or financial sites — require sophisticated proxy networks and browser automation that exceed beginner capabilities. These scenarios need dedicated scraping infrastructure or commercial services.

The calculus shifts at scale. Scraping 10,000+ pages daily requires managing distributed scrapers, handling rate limits across IP pools, and monitoring for structural changes. At this volume, evaluate whether buying data or partnering with aggregators reduces operational overhead.

Legal uncertainty in your jurisdiction or industry warrants caution. Healthcare and financial services face stricter data handling requirements. When compliance risk outweighs data value, manual collection or official partnerships provide safer alternatives.

Frequently asked questions

Is web scraping actually legal if the data is public?

Legality depends on how you scrape and what you do with the data. Accessing public pages while respecting robots.txt generally stays legal, but violating terms of service, bypassing access controls, or misusing personal data creates liability regardless of public availability.

Can I scrape without knowing how to code at all?

Yes. Modern platforms like CodeWords, Octoparse, and ParseHub use visual interfaces where you point and click on target elements. Basic scrapers require zero coding; advanced customization benefits from light JavaScript knowledge but isn't mandatory.

How can I tell if a website will block my scraper?

Check robots.txt at domain.com/robots.txt for disallowed paths, review terms of service for scraping clauses, and test with slow initial requests while monitoring for 403/429 HTTP errors. Sites with login requirements or heavy JavaScript typically implement detection.
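For readers who do script their tests, the monitoring step could be sketched as a small decision function. HTTP 429 means "Too Many Requests" and 403 means "Forbidden"; the policy below (back off and retry on 429, stop on 403) and all parameter values are assumptions chosen for illustration, not a standard.

```python
def next_action(status_code, attempt, base_delay=5.0, max_attempts=4):
    """Decide what to do after a response.

    Returns (action, wait_seconds) where action is "ok", "retry", or "stop".
    `attempt` counts retries already made for this URL, starting at 0.
    """
    if 200 <= status_code < 300:
        return ("ok", 0.0)
    if status_code == 429 and attempt < max_attempts:
        # Too Many Requests: wait longer each time (5s, 10s, 20s, 40s).
        return ("retry", base_delay * 2 ** attempt)
    # 403 Forbidden, exhausted retries, or anything unexpected: stop and
    # review the site's terms rather than pushing harder.
    return ("stop", 0.0)


print(next_action(200, 0))  # ('ok', 0.0)
print(next_action(429, 2))  # ('retry', 20.0)
print(next_action(403, 0))  # ('stop', 0.0)
```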

What's the difference between web scraping and web crawling anyway?

Crawling discovers and indexes pages across sites (like search engines do), while scraping extracts specific data from known pages. Crawlers follow links to map site structures; scrapers target defined elements to collect information. Most business use cases need scraping, not crawling.

From manual collection to automated intelligence

Web scraping transforms how operators access market data, but only when extraction connects to action. The real leverage isn't collecting competitor prices; it's automatically adjusting your pricing strategy when market conditions shift.

Starting simple beats waiting for perfect solutions. A basic scraper monitoring 20 competitor products weekly provides more intelligence than quarterly manual audits. Scale complexity as needs grow, not before.

The implication extends beyond data collection. Organizations building scraping competency gain systematic advantages in market visibility, competitive response speed, and research efficiency. These capabilities compound as you connect scraped data to analytics, notifications, and decision workflows.

Explore CodeWords workflows that combine scraping, transformation, and intelligent routing.